...over the period of the satellite record (1979-2012), both the surface and satellite observations produce linear temperature trends which are below 87 of the 90 climate models used in the comparison.

Let's just do a quick fact check. First of all, he's wrong. Even in his wonky chart (scroll down the page to see it), it's only their own satellite record that's "below" "over the period of the satellite record". The surface temperatures don't drop below the bulk of the models until the tail end of the observations, from around 2008 onwards. So I looked more closely at his chart, and this is what I noticed:

- the different series of observations and models unusually all started at almost the same point and

- UAH diverged a lot from the surface temperatures.

Now that's odd, I thought. I've previously compared UAH and GISTemp and noted how similar they are over time. So how is it that they diverge so much in Roy's rendering?

Then I saw a question by Steven Mosher. He asked:

October 14, 2013 at 4:02 PM

Hi Roy,

whats the Y axis? departure from 1979-83 normal?

Sure enough, Roy's Y axis had the label: "Departure from 1979-83 average (deg C)"

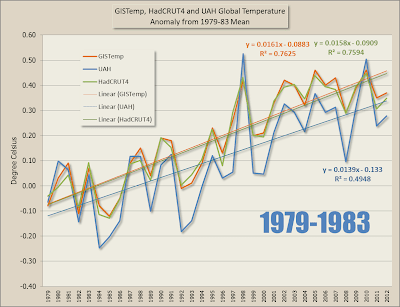

Now why would John Christy use such a short period of time as a baseline? The normal period used is 30 years. Four years is a very short period for a baseline. So I ran up a couple of charts and you can see the difference for yourself.

This first one shows that UAH (lower troposphere) has higher highs and lower lows than the surface temperature, but the two track each other fairly closely. This chart uses a 30 year baseline, 1981-2010. Take note of the fact that 1983, John's start year, lies above the trend.

This next chart uses John Christy's four year baseline, 1979-1983. If you compare it with the top chart, you'll see why UAH diverges so much. The first three years of UAH are quite a bit above the surface temperature; the two come together more from 1982 onwards. Using a baseline of 1979-83 therefore shifts UAH down relative to the surface record.
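To see how a short, early baseline can open up a gap between two series that otherwise agree, here is a minimal Python sketch. The two series are invented toy numbers (not UAH or GISTemp data), built so that they are identical except for a warm excursion in the first three years; `rebaseline` and `gap_in_final_year` are hypothetical helper names for illustration, and the code treats 1979-83 as inclusive.

```python
# Toy illustration: how the choice of baseline period shifts one anomaly
# series relative to another. The values are HYPOTHETICAL, not real data.

def rebaseline(years, values, start, end):
    """Return values minus their mean over the inclusive period start..end."""
    base = [v for y, v in zip(years, values) if start <= y <= end]
    if not base:
        raise ValueError("no data in baseline period")
    offset = sum(base) / len(base)
    return [v - offset for v in values]

years = list(range(1979, 1989))  # 1979..1988 inclusive
# Hypothetical "surface" series with a steady warming trend.
surface = [0.0, 0.1, 0.1, 0.1, 0.2, 0.2, 0.3, 0.3, 0.4, 0.4]
# Hypothetical "satellite" series: identical, except the first three
# years (1979-1981) run 0.2 C warmer, like an early warm excursion.
satellite = [s + (0.2 if y < 1982 else 0.0) for y, s in zip(years, surface)]

def gap_in_final_year(start, end):
    """Satellite minus surface anomaly in the last year, for a given baseline."""
    sat = rebaseline(years, satellite, start, end)
    sfc = rebaseline(years, surface, start, end)
    return sat[-1] - sfc[-1]

gap_short = gap_in_final_year(1979, 1983)  # short, early baseline
gap_long = gap_in_final_year(1979, 1988)   # baseline spanning the whole toy record

print(f"1979-83 baseline: satellite sits {gap_short:+.2f} C vs surface")
print(f"1979-88 baseline: satellite sits {gap_long:+.2f} C vs surface")
```

With these made-up numbers, the short early baseline doubles the apparent offset of the "satellite" series in the final year compared with a baseline spanning the whole toy record. How big the effect is in real data depends on how unusual the baseline years are relative to the rest of the record.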

Neat trick by Roy and John Christy? What do you think? Should we use that trick whenever we want to argue how different the satellite record of the lower troposphere is from the average global temperature of the land and sea surface?

I haven't plotted the model runs that Roy and John did, but I do have some questions for them. I'll show their chart first, then ask the questions.

I have these questions:

- Why did John Christy use a four year baseline period instead of a 30 year baseline as is usual?

- How did John Christy get all the CMIP5 models to start at exactly the same zero degree anomaly point?

- Why did they start at 1983 instead of 1979, which is when UAH started and when their baseline period started? Oh, that's because they used five year running means (see next point).

- Why did they use five year running means instead of annual averages?

Now let's see how the models are reported in the IPCC report.

From TFE.3, Figure 1: IPCC WG1 Technical Summary (page TS-96)

Comparing John's chart to the IPCC chart above, I must say that John Christy messed up his model runs. Unlike in John's chart, there is no point at which the models all converge in the IPCC chart. As well as that, while the observations are at the low end of the models right now, that wasn't the case back in the 1980s and 90s. John Christy's chart shows UAH diverging from the get-go, way back in 1983.

Later addition: --o-- The fact that 1983 lies above the trend line (see top chart above) probably means that John's model runs, which start at zero in 1983, are too high. It's the same problem that was discussed elsewhere when people were looking at an early draft of the IPCC report. --o--

Shall we just assume that John Christy and Roy Spencer are having a little fun at the expense of Anthony Watts and other science deniers? Anthony Watts reposted Roy's article at WUWT without any notice to indicate he knew it was a joke. In fact, Anthony seems to think Roy is serious. He writes:

Reality wins, it seems. Dr Roy Spencer writes:

Well, Anthony, it's not reality. It's just more mischief from John Christy and Roy Spencer. It gives Wondering Willis a chance for payback if he cares to take it.

From the WUWT comments

This article has brought out the lowest common denominator of denier at WUWT. There is almost no-one querying the chart or making a useful comment. It's just chanting of "all the models are wrong", "scientists don't know nuffin'" and "it's not CO2". And Anthony boasts about his website? Seriously?

JustAnotherPoster says something rather garbled. In between his "all the models are wrong" chant, something went wrong between the keyboard and brain?

October 14, 2013 at 2:15 pm

As RJB would state. The really clever work now would be to bin all the model that are failures and investigate why the two at the bottom have matched reality and what they have assumed compared with the failed ones.

That’s the Potential published paper work What have the model winners assumed that the model failures haven’t

magicjava asks a good question:

October 14, 2013 at 3:42 pm

Just a quick question. Why are temperatures given as a 5 year mean? Why not plot the actual temperature?

Jimbo is not at all sceptical of John Christy's chart and says:

October 14, 2013 at 3:43 pm

There are 3 words that Warmists hate to see in the same paragraph. These 3 words can cause intolerable mental conflict.

*Projections, *observations, *comparisons.

At one of the IPCC insiders’ meetings they knew full well that there was a problem. Some bright spark must have suggested that they simple pluck a new confidence number out of thin air otherwise they would be doomed (and shamed). Desperate times call for desperate measures. Just look at the graph. You won’t see this kind of behavior in any other science.

shenanigans24 sees what he wants to see and doesn't question anything either:

October 14, 2013 at 4:02 pm

I would say 100% are wrong. The fact that 2 or 3 haven’t overshot the temperature doesn’t make them right. They aren’t following the observed temperature. It’s clear that none of them simulate the actual climate.

richardscourtney says:

October 14, 2013 at 4:21 pm

Friends:

Some here seem to think rejection of the models which are clearly wrong would leave models which are right or have some property which provides forecasting skill and, therefore, merits investigation. Not so. To understand why this idea is an error google for Texas Sharp Shooter fallacy.

Models which have failed to correctly forecast are observed to be inadequate at forecasting. Those two (or three?) which remain are not known to be able to forecast the future from now. One or more of them may be able to do that but it cannot be known if this is true.

Richard

John Franco hasn't yet woken up to the fact that deniers can change their tune on a whim. He's looking for some denialist consistency and can't find any at WUWT. He says:

October 14, 2013 at 4:47 pm

I’m still confused why we care about HADCRUT. I thought WUWT demonstrated that half the “warming” came from bad ground stations and other fudge factors. I also thought another article on WUWT demonstrated that that HADCRUT takes advantage of some bad mathmatics to suppress temperatures early than 1960, especially the high temps of the 1940s.

Unlike most deniers who say it's all too complex, Latitude reckons climate is very simple and says:

October 14, 2013 at 5:02 pm

Richard, I see it as simply not willing to admit that CO2 isn’t as powerful as they want it to be….

covering it up and justifying it with “aerosols” etc….

That way they can still blame it all on CO2

I'm not as familiar with the topic as I should be, but I thought the models were run under different scenarios (the Representative Concentration Pathways, or RCPs). I think it's very useful to compare model predictions to empirical data, but the models should be run with a realistic set of parameters once they go from matching mode to prediction mode. Because the scenarios are quite different, wouldn't it be better to run the comparisons using actual conditions rather than whatever was assumed during a model comparison exercise? I emphasize this point because the assumed parameters tend to deviate from reality as time goes by. This is to be expected because there are so many uncertainties in human behavior as well as natural phenomena.

Good point, Fernando. Roy Spencer doesn't say what pathway was used by John Christy in his chart.

The main point, though, is the trick they used to get UAH too low. It's only UAH that's below most of the model runs for the period. Surface temps aren't nearly as different. And UAH is Roy and John's baby!

The other problem to my way of thinking (because it's over such a short period of time) is that John seems to somehow have aligned all the models to a single zero temperature data point in 1983, which is not how I understand the models to work. I could be wrong there, but the 1983 temp was above the trend, so that could mean that he's aligned the models too high. Same as was discussed with an early draft IPCC report. (I've added a bit to the main article in that regard.)

http://blog.hotwhopper.com/2013/10/steve-mcintyre-and-anthony-watts-fail.html

So they show 90 different models, but how many runs did they do for each model? What is the spread of each model?

Are there 90 different models, Lars? That seems an awful lot of models. Maybe Roy Spencer meant 90 different model runs from a smaller number of models.

John Christy just used data from the KNMI Climate Explorer. He didn't run any models himself.

I suppose one can run the same model under different conditions (e.g. RCPs), and then one might get 90 different combinations.

It's another exercise in deliberately misleading graphs, something Christy has been doing for years with tropical tropospheric temperature; we all remember the most recent outing, as Sou covered it here.

All involved in creating and promoting this graph stand revealed as either calculatingly dishonest or gullible and naive.

Don't attribute to stupidity what you can attribute to malice.

"Ph.D. Atmospheric Science, University of Illinois (1987).

M.S., Atmospheric Science, University of Illinois, (1984)."

According to http://www.desmogblog.com/john-christy .

You may safely assume the guy knows better and is a paid merchant of ignorance. Not steering clear of Heartland clinches the verdict.

I had hoped I was clear on this point: I know Christy and Spencer are aware of what they are doing and are fully culpable. AW is the stupid and gullible one here. Not to mention the bovids in comments there.

Clear, BBD, though IMO AW is a malicious bully. And a stupid one at that.

Not arguing with that...

;-)

If it is a 5 year running mean how does it go up to 2012?

Unless each datapoint is the average of the 5 prior years (?) <-- a judith curry question mark.

If it is the average of the last prior 5 years that would mean the observations are effectively shifted to the right by about 2.5 years relative to the models (unless the models are plotted in the same way?). So not only are the observations shifted too low, they may have been shifted to the right too.

The baseline Christy used, 1979-1983, is a 5 year period: it includes 79, 80, 81, 82 and 83. It's basically the first point on his 5 year running mean. Of course, that ISN'T the 5 year average centered on 1983. It's the average centered on 1981. So Christy's graph is shifted 2 years to the right.
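The labelling question can be sketched in a few lines of Python, assuming an annual series for 1979-2012 (the values are a made-up linear trend, and `running_means` is a hypothetical helper). The same 5-year averages are labelled once by their middle year (centered) and once by their last year (trailing).

```python
# Sketch: the same 5-year averages plotted with two different year labels.
# Data are a HYPOTHETICAL annual series for 1979-2012, not real observations.

def running_means(values, window=5):
    """Average of each run of `window` consecutive values (no padding)."""
    return [sum(values[i:i + window]) / window
            for i in range(len(values) - window + 1)]

years = list(range(1979, 2013))               # 1979..2012, 34 years
values = [0.015 * (y - 1979) for y in years]  # toy linear warming trend

means = running_means(values)
half = 5 // 2                                  # 2 years either side of the middle

# Label each 5-year window by its middle year (centered running mean) ...
centered = dict(zip(years[half:], means))
# ... or by its last year (trailing running mean).
trailing = dict(zip(years[2 * half:], means))

print("centered runs", min(centered), "to", max(centered))
print("trailing runs", min(trailing), "to", max(trailing))

# The window averaging 1979-83 is labelled 1981 if centered, 1983 if trailing.
assert centered[1981] == trailing[1983]
```

A centered 5-year mean of data ending in 2012 can only run to 2010. Labelling each window by its final year instead pushes the whole curve two years to the right, which for a warming series makes it sit lower than it should for any given year.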

I've hacked together a "correction" (it still uses his dumb baseline) by overlaying the actual 5 year running mean calculated on woodfortrees. As you can see, his mistake surprisingly works to the advantage of a certain agenda!

http://i39.tinypic.com/wt9hzo.jpg

It would be interesting if someone did the job right to see just how wrong Christy's graph is.

Oh, RealClimate have already done that.

http://www.realclimate.org/index.php/archives/2013/02/2012-updates-to-model-observation-comparions/

KR

Might that also apply to the model data? With the 1983 data actually being the 1981 average, and all model data shifted over by 2 years?

One other trick played in the Spencer/Christy graph is to start all of the models from the same point. That's not what is done in practice: the models are run up over a period of time and have a distribution along the entire period, as shown on RealClimate. By "pinching" all of the model runs to 1983, they have artificially reduced the model spread at the beginning of the figure, effectively (and deceptively) reducing model variance over that period.
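A toy Python sketch of the "pinching" point, using three invented runs rather than real CMIP5 output: subtracting each run's own 1983 value forces every run through zero that year, so the spread there collapses, while subtracting each run's multi-year mean keeps the run-to-run spread. The run names and numbers are hypothetical.

```python
# Sketch: "pinning" every model run to zero in a single year versus
# subtracting each run's multi-year baseline mean.
# The three runs are HYPOTHETICAL numbers, not CMIP5 output.
from statistics import mean

years = [1981, 1982, 1983, 1984, 1985]
runs = {
    "run_a": [0.10, 0.30, 0.05, 0.20, 0.25],
    "run_b": [0.20, 0.00, 0.25, 0.15, 0.30],
    "run_c": [0.00, 0.15, 0.20, 0.35, 0.10],
}

def spread(aligned, year):
    """Max minus min across runs in a given year."""
    i = years.index(year)
    vals = [series[i] for series in aligned.values()]
    return max(vals) - min(vals)

# Option 1: subtract each run's own 1983 value, forcing all runs through zero there.
i83 = years.index(1983)
pinned = {name: [v - s[i83] for v in s] for name, s in runs.items()}

# Option 2: subtract each run's mean over the whole (toy) baseline period.
baselined = {name: [v - mean(s) for v in s] for name, s in runs.items()}

print("spread in 1983, pinned:   ", round(spread(pinned, 1983), 2))
print("spread in 1983, baselined:", round(spread(baselined, 1983), 2))
```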

KR

I might well have missed it, but I don't recall there being a debunk of the original Christy confusion graph where he plotted CMIP5 runs against "observations" of averaged satellite MT data sets (HotWhopper post here).

He seems to be getting rather too adventurous with this trickery - perhaps a comprehensive reference debunk would be timely if one does not already exist?

KR

BBD, I believe I've entirely lost track of how many deceptive graphs from Christy (and, rather secondarily, Spencer; most appear to originate with Christy) have come and gone.

A few that stick in mind:

* Plotting TMax records for US reference stations, completely neglecting that, without any trend, the chance of a new record in year n of an n-year record falls as 1/n, thus presenting graphs with apparent decreases over time. The appropriate correction for record length (which cancels it out) is to look at the _ratio_ of record highs to record lows, as per Meehl et al 2009, and that shows the increases over recent decades.

* The CMIP5 versus satellite graph, where again the baseline is the 1979-1983 mean, a period that includes the extremes in the early satellite record (in that case shifted two years earlier, as opposed to the current graph, where he's shifted everything two years later).

* Reproducing (accurately, mind you) winter snow extent records (fairly level) for the US and presenting them as the entire story - when spring and summer records, and in fact the yearly records, all show statistically significant decreases.

Bad graph construction, presenting partial and uncorrected data, claiming inaccuracies in models and weather records while failing to acknowledge the possibilities of errors and inaccuracies in his own temperature data (Po-Chedley et al 2011) - what they present in support of their ideology appears to be far divorced from reality...
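The 1/n point in KR's first bullet can be checked with a quick Python simulation. This is a sketch under a loudly stated assumption: every series is independent Gaussian noise with no trend at all, which is neither real station data nor Meehl et al.'s method. Even so, the share of series setting a new record high falls roughly as 1/n, while the ratio of record highs to record lows stays close to 1. `record_counts` is a hypothetical helper.

```python
# Sketch: record counts in trend-free random series (ASSUMPTION: i.i.d.
# Gaussian noise, purely illustrative, no real station data).
import random

def record_counts(n_series, n_years, seed=0):
    """Count new record highs and lows at each year index across many
    independent, trend-free series of Gaussian noise."""
    rng = random.Random(seed)
    highs = [0] * n_years
    lows = [0] * n_years
    for _ in range(n_series):
        hi = lo = None
        for n in range(n_years):
            x = rng.gauss(0.0, 1.0)
            if hi is None or x > hi:   # new record high (year 1 always counts)
                hi = x
                highs[n] += 1
            if lo is None or x < lo:   # new record low (year 1 always counts)
                lo = x
                lows[n] += 1
    return highs, lows

N_SERIES, N_YEARS = 4000, 50
highs, lows = record_counts(N_SERIES, N_YEARS)

for n in (2, 10, 50):  # 1-based year number
    rate = highs[n - 1] / N_SERIES
    print(f"year {n:2d}: share setting a record high {rate:.3f}, 1/n = {1 / n:.3f}")

# Skip year 1, where every value is trivially both a high and a low record.
ratio = sum(highs[1:]) / sum(lows[1:])
print(f"record highs / record lows, years 2-{N_YEARS}: {ratio:.2f}")
```

Raw record counts drop over time even though nothing in these series is changing, which is why the high/low ratio, not the raw count, is the meaningful quantity.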