Over the years, scientists in different parts of the world have worked hard to get a more accurate picture of the change in global surface temperature over time. This is slow painstaking work. Initially it would have meant working with written records, with people trying to decipher handwriting of the tens of thousands of people who wrote down readings of temperature and rainfall, and other weather indicators, from all the weather stations around the world. Over time the data was digitised - another extremely laborious task.

I'm not going to write about all that's been done. It's a mammoth ongoing effort involving people from all around the world. What I'm writing about are the ignorant scoffers. You know the people I mean. The ones who sit at their keyboards all day to snipe at the work done by scientists.

Anthony Watts has put up three articles from one chap who's been looking to see the extent of this careful work, as measured by adjustments to the original data. He's only looked at two data sets. One is the Global Historical Climatology Network (GHCN), which is used by NASA and NOAA for global land surface temperatures. It was first developed in the early 1990s, with the current version 3 released in 2011. The other is the United States Historical Climatology Network (USHCN), which is the high quality dataset used by NOAA for USA temperatures since 1987.

The Global Historical Climatology Network

NASA and NOAA use GHCN-M for the land component of the GISS Surface Temperature Analysis (GISTEMP). The M stands for "monthly".

This year, NOAA announced that it will be changing to a broader set of data, roughly doubling the number of stations. Version 4 of the Global Historical Climatology Network Monthly (GHCN-M) will be using data from the International Surface Temperature Initiative (ISTI).

From the NOAA website:

The Global Land Surface Temperature Databank contains monthly timescale mean, maximum, and minimum temperature for approximately 40,000 stations globally. It was developed as part of the International Surface Temperature Initiative. This is the global repository for all monthly timescale land surface observations from the 1800s to present and uses data deriving from sub-daily, daily, and monthly observations. It brings together data from more than 45 sources to create a single merged dataset. It will be used in the creation of various integrated global temperature resources, most notably Global Historical Climatology Network Monthly (GHCN-M) v4.

I expect that will keep deniers at WUWT quite busy, adding up all the corrections to raw data over time for 40,000 stations.

I don't know how many person hours have gone into developing ISTI, but it's fair to say that it will be orders of magnitude more than goes into maintaining a denier blog like WUWT.

Quality assurance and homogenisation

Below are just the quality assurance steps that are taken to prepare the monthly GHCN data, which you wouldn't know about if you only read denier blogs. There is more detail here. (Most deniers don't want any quality assurance. They call it "fudging".)

Then there are the homogeneity adjustments. This is described by NOAA as follows:

Many surface weather stations undergo minor relocations through their history of observation. Stations may also be subject to changes in instrumentation as measurement technology evolves. Further, the land use/land cover in the vicinity of an observing site may also change with time. Such modifications to an observing site have the potential to alter a thermometer's microclimate exposure characteristics and/or change the bias of measurements, the impact of which can be a systematic shift in the mean level of temperature readings that is unrelated to true climate variations. The process of removing such "non-climatic" artifacts in a climate time series is called homogenization.

In version 3 of the GHCN-Monthly temperature data, the apparent impacts of documented and undocumented inhomogeneities are detected and removed through automated pairwise comparisons of mean monthly temperature series as detailed in Menne and Williams [2009]. In this approach, comparisons are made between numerous combinations of temperature series in a region to identify cases in which there is an abrupt shift in one station series relative to many others. The algorithm starts by forming a large number of pairwise difference series between serial monthly temperature values from a region. Each difference series is then statistically evaluated for abrupt shifts, and the station series responsible for a particular break is identified in an automated and reproducible way. After all of the shifts that are detectable by the algorithm are attributed to the appropriate station within the network, an adjustment is made for each target shift. Adjustments are determined by estimating the magnitude of change in pairwise difference series form between the target series and highly correlated neighboring series that have no apparent shifts at the same time as the target.
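
To make the pairwise idea a bit more concrete, here is a toy sketch in Python. It is not NOAA's algorithm (that's in Menne and Williams [2009]). The function names, the `min_len` and `min_shift` settings, the synthetic station data and the choice to shift the earlier segment are all my own assumptions for illustration. It forms difference series between a target station and its neighbours, looks for the biggest jump in mean level in each, and only adjusts the target if most neighbours point to the same break.

```python
import math
from statistics import mean, median

def difference_series(target, neighbor):
    """Month-by-month difference between two station series."""
    return [t - n for t, n in zip(target, neighbor)]

def largest_shift(diff, min_len=12):
    """Find the split with the largest jump in mean level.

    Returns (index, shift) where shift = mean(diff[index:]) - mean(diff[:index]).
    min_len (an assumed setting) keeps that many months on each side.
    """
    best_index, best_shift = None, 0.0
    for i in range(min_len, len(diff) - min_len + 1):
        shift = mean(diff[i:]) - mean(diff[:i])
        if abs(shift) > abs(best_shift):
            best_index, best_shift = i, shift
    return best_index, best_shift

def homogenise(target, neighbors, min_shift=0.2):
    """Remove one step change from target if most neighbours agree on it."""
    breaks = [largest_shift(difference_series(target, n)) for n in neighbors]
    found = [(i, s) for i, s in breaks if abs(s) >= min_shift]
    if len(found) <= len(neighbors) / 2:
        return list(target), None, 0.0  # no break attributed to the target
    index = int(median([i for i, _ in found]))
    shift = median([s for _, s in found])
    # Assumed convention: move the earlier segment to match the later one.
    adjusted = [t + shift if m < index else t for m, t in enumerate(target)]
    return adjusted, index, shift

# Synthetic example: ten years of monthly data, made up for illustration.
months = range(120)
climate = [10 + 0.002 * m + 3 * math.sin(2 * math.pi * m / 12) for m in months]
neighbors = [
    [c + offset + 0.02 * math.sin(k * m) for m, c in zip(months, climate)]
    for offset, k in [(-1.0, 0.7), (0.4, 1.1), (1.5, 1.9)]
]
# The target station gets a 0.5 degree step at month 60, e.g. a station move.
target = [c + (0.5 if m >= 60 else 0.0) for m, c in zip(months, climate)]

adjusted, index, shift = homogenise(target, neighbors)
print(index, round(shift, 2))
```

The shared climate signal cancels out in each difference series, which is the whole point of comparing neighbours: what's left is the step that belongs to the one station. The real thing handles multiple breaks, many more neighbours and proper significance tests.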

USA - Historical Climatology Network and the Climate Reference Network

For the US only, NOAA uses the U.S. Historical Climatology Network (USHCN). This is different to the finished data used in the global composite, the GHCN. In the latest WUWT article about the proportion of data changed (archived here), John Goetz wrote about the proportion of records that have been amended in the USHCN, which would be similar to the changes described above except that the USHCN fills in data gaps, which the GHCN doesn't.

In the USA, there is also a new network of weather stations, the U.S. Climate Reference Network (USCRN), which was developed as a check on the broader network. Unlike most of the weather stations, all of it is maintained by NOAA rather than volunteers, and the stations have been placed in locations where the surrounds are not expected to change, so it is considered pristine. You can see a description of a CRN weather station here.

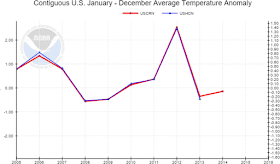

Importantly, the data is virtually indistinguishable from the USHCN as can be seen in the charts below.

First the monthly comparisons:

Then the annual comparisons:

At the bottom of the latest WUWT article Anthony Watts wrote:

Of course Tom Karl and Tom Peterson of NOAA/NCDC (now NCEI) never let this USCRN data see the light of day in a public press release or a State of the Climate report for media consumption, it is relegated to a backroom of their website mission and never mentioned. When it comes to claims about hottest year/month/day ever, instead, the highly adjusted, highly uncertain USHCN/GHCN data is what the public sees in these regular communications.

One wonders why NOAA NCDC/NCEI spent millions of dollars to create a state of the art climate network for the United States, and then never uses it to inform the public. Perhaps it might be because it doesn’t give the result they want? – Anthony Watts

Anthony is wrong on almost all counts.

- The CRN data only goes back to 2006, so it's not of any use in State of the Climate Reports, which compare changes going back decades.

- There is nothing in particular to report in a press release. The CRN data is virtually indistinguishable from USHCN data.

- These charts and the underlying data do see the light of day. They are on the NOAA website, for all the world to see.

- When it comes to claims of the hottest year/month/day ever, the charts show that USHCN and USCRN are virtually identical. On an annual basis in 2012 and 2013, USCRN is ever so slightly hotter than USHCN!

Looking at the charts above, you can see why Anthony keeps his mutters to his blog, and doesn't back up his comment with data. That's typical of deniers. You can readily see the conspiratorial thinking that Anthony is indulging in, too, with his "something must be wrong" (though it's not) and "nefarious intent" comment.

It took Anthony a long time to wake up to the fact that the National Climatic Data Center is no longer, and it's now been merged into the National Centers for Environmental Information (NCEI). He's out of the loop on a lot of things. He'll be pushing to get Tom Peterson, President of the WMO Commission for Climatology, to take any notice of Anthony's NOAA nonsense these days. (See here and here.)

US temperatures over time

To complete, here is a chart of decadal temperatures for the contiguous USA from 1895 to 2013 (the latest available for some reason).

Data source: NOAA

From the WUWT comments

George E. Smith doesn't know anything about US temperatures, or quality assurance, or homogenisation, or statistics, but he knows what conspiracy theories he likes:

September 27, 2015 at 11:02 am

Is any of this behavior considered criminal, or fraudulent, or deceptive, or misleading, or argumentative, or speculative, or even immoral ??

One advantage of doing statistical mathematics (pure fiction) rather than real science, is that there is a built in pre-supposition that your results automatically incorporate uncertainty.

Well actually, your results are never uncertain, if you know how to do 4-H club arithmetic correctly.

The uncertainty rests entirely on what you claim your results mean; all of which is entirely in your head.

Mike Smith reckons nine years of data is all he needs, though he probably doesn't even know how to analyse that. Nor does he know that CRN data is almost identical to the US historical network data.

September 27, 2015 at 11:06 am

USCRN and the satellite data both show a complete lack of warming. Those are the best data sources we have, by far.

The unadjusted surface temperature record shows no warming.

The conclusion is clear: global warming is caused exclusively by an artifact of some highly questionable statistical adjustments.

Wojciech Peszko is clued up, and points out the two data sets have the same result. Hardly anyone takes any notice:

September 27, 2015 at 11:29 am

Adjusted US surface data also show no or little warming, so they’re in agreement.

The conculsion is clear: we can’t say whether the long term trend stopped or not.

markstoval is a WUWT regular, who usually spouts conspiracy theories like this:

September 27, 2015 at 11:31 am

This post clear shows that the so-called data sets are not data at all. The “data” set is false and is just made up. One wonders why there is not one single main stream investigative reporter willing to write a story on this issue. Not one.

Zeke Hausfather points out a couple of things wrong in the article, with charts. (Follow the link to see his full comment.)

September 27, 2015 at 11:32 am

This article gets a few things wrong. First, the effect of TOBs adjustments to U.S. temperature data is larger than those of pair-wise homogenization (~0.25 C per century vs. 0.2 C).

Danny Thomas asks Zeke a question:

September 27, 2015 at 11:55 am

Mr. Hausfather,

Thank you for the input and links.

Is it in no way bothersome to discover that some 92% of the data is adjusted? I’ve seen yours and Mosher’s discussions in the past but still find that it heightens my sense of concern that so much data gathering is considered to be so invalid in supposedly an improving state of the art system of instrumentation.

Being the consumer of said system, I’d have no choice but to ask for a refund or substantial discount.

Zeke Hausfather explains that back in the day, Grover Cleveland didn't foresee the eruption of conspiracy theory blogs like WUWT. He had other things on his mind. Otherwise he most certainly would have bowed to the collective wisdom of deniers and set up a department to set up a climate reference network back in 1895 (not really) - (my emphasis)

September 27, 2015 at 12:28 pm

Hi Danny,

Unfortunately USHCN is not by any measure a “state of the art” system; we didn’t have the foresight to set up a climate monitoring system back in 1895, rather we are relying on mostly volunteer-manned weather stations that have changed their time of observation, instrument type, and location multiple times in the last 120 years. There are virtually no U.S. stations that have remained completely unchanged for the past century. The whole point of adjustments are to try and correct for these inhomogenities.

The reason why adjustments are on balance not trend-neutral is that the two big disruptions of the U.S. temperature network (time of observation changes and conversion from liquid-in-glass to electronic MMTS instruments) both introduce strong cooling biases into the raw data, as I discuss here (with links to various peer-reviewed papers on each bias): http://berkeleyearth.org/understanding-adjustments-temperature-data/

I also did a synthetic test of TOBs biases using hourly CRN data that might be of interest: https://archive.is/9Zayx [replaced link - Sou]

The new USCRN station is a state of the art climate monitoring system. So far its (unadjusted) results agree quite well with the (adjusted) USHCN data, which is encouraging. Going forward it will provide a useful check (and potential validation) of the NOAA homogenization approach.

Steven Mosher also points out that USHCN is the same as USCRN:

September 27, 2015 at 5:25 pm (excerpt)

If USCRN is the gold standand..

Then if adjustments to USHCN, result in USCHN matching USCRN

What can one conclude about adjustments and infilling?

AndyG55 is not impressed by the fact there's no difference, and talks about someone called "nobody", who is impressed:

September 27, 2015 at 6:39 pm

You are trying to sell a LEMON, Mr car saleman !

And nobody is buying it. !!

Further reading

I'll just post some links. First a link to Victor Venema's articles on homogenization. There are lots more articles on the internet as well. Do a google search for Zeke Hausfather. Visit Nick Stokes' blog. Go to SkepticalScience.com. And read the FAQs and other information on sites like NASA's GISS, NOAA, BoM etc. Or use the search bar up top for other HotWhopper articles on the subject (I mostly spell homogenisation with an 's').

I have been putting in my two bobs worth on these articles (in between getting sniped at from the usual suspects). Some of the comments have been excellent.

It is still a red-herring of course. The implication is the adjustments in an adjusted data set are somehow an attempt to mislead the United States public.

Whether by design or not, some are pushing the 'idea' that thermometer measurements are being adjusted. It is bollocks of course, the unadjusted data sets are (mostly) free from homogenization. I have seen a similar idea being pushed on the JoNova website as well.

So Anthony avoids mention of his own 'gamechanging' reanalysis of temperature data and the winged monkeys dutifully neglect to ask why.

Yes, Millicent. WUWT-ers are nothing if not docile and compliant.

It's now more than three years since his breathless announcement that was going to change the face of climate science forever, or something. Either he can't get the data to refute his previous paper after all, or he can't explain any mechanism, or he can't get anyone to rescue the paper for him this time around, or he can't get his secretive open society organised well enough to publish it (or any other journal).

Thanks for bringing some sanity to this issue, Sou. Your post brought back a lot of fond memories from my time at NCDC building GHCN-M version 2, including searching out European archives of Colonial Era data from around the world with the late, great, Prof. John Griffiths, and digitizing them to increase our global coverage in the early years of the data record. E.g., http://www.ncdc.noaa.gov/oa/climate/research/ghcn/colonialarchive.html and http://www.ncdc.noaa.gov/img/col.gif

But the most relevant memory was when a State Climatologist visited for a couple weeks and argued that we were biasing his state's climate record because our USHCN station adjustments created more warming than cooling while he assumed that they should be random. This was back in the day when station history files were only available in filing cabinets in our basement. So we agreed to evaluate the 10 largest adjustments for stations in his state. It turned out that 8 of the 10 were for station moves from city centers to sewage treatment plants. That's when he realized that systematic adjustments were in response to systematic changes in the observing system.

Thanks for the anecdote, Tom. The reality of all the solid work you and others have put in over the years should shame Anthony Watts and his deniers. (If they were capable of the emotion.)

The move from city rooftops to wastewater treatment plants and airports is a particularly interesting one. It causes some weirdly low century-scale urban warming trends if you mistakenly look at the raw data.

By the way, thanks for all your hard work on temperature data over the years Tom. Hope you are enjoying a well-deserved relaxing retirement :-)

It's posts like this on WUWT that I find particularly sad:

"katherine009

September 27, 2015 at 12:56 pm

Some days, I am so disheartened. I am seriously worried for the future my kids (and hopefully grandkids) will have. Energy poverty, destroyed economies, all to satisfy what? It’s madness. I’m doing my best to enlighten others, but my command of all the facts is limited. I read here daily, educating myself and hopefully others. Thank you Mister Watts."

The poor dear seriously thinks she is "educating herself and hopefully others" by swallowing and regurgitating the pseudoscience nonsense on WUWT.

It reads to me like a typical denier sockpuppet post. Utterly fake and intended to try and impart some credibility to WUWT in the eyes of any naive visitor to the site.

Definitely sockpuppetry here. katherine009 first appears fully-fledged on June 6 and dives right into many of the usual themes.

Google "katherine009 says:" and site:wattsupwiththat.com

I wonder who he is. dbstealey? Bob Tisdale? Poptech? Steve McIntyre? They've all used sockpuppets :)

Sou -

When did Stevie-Mac use a sockpuppet?

Using that Google search, Katherine009 goes back years before June. It's hard to reliably identify a denier sockpuppet because these people do not behave as 'normal' people do, so I could well be wrong.

And if I might answer Joshua's question: McIntyre was caught using "Nigel Persaud" to wax lyrical about his own work. Googling on that name plus McIntyre should lead you to the whole sorry story.

@ Millicent, you have to be careful. I first looked in vain for katherine009 on some of the early search results from "katherine009", then checked Google's cached page from the search and found the name in the Recent Posts sidebar, referencing much newer comments dating from when Google last captured the page. My revised search used the string "katherine009 says:" which excluded those false hits.

Ah sorry, I am not a skilled Googler, and being careful about anything is not a habit I have picked up :)

Looking through the katherine009 posts does seem to reinforce the sockpuppet impression. There was also "asybot" whose rather ill thought out comment informed us that he was pleased his parents were dead as that was (apparently) preferable to them listening to Pope Francis. Who would have thought that sockpuppets could be so cold hearted.

Google Nigel Persaud for Stevie Mac's sockpuppet.

Way back when, Eli pointed out to Willard Tony that the USCRN was Tom Karl's trick to validate the USHCN and the homogeneity adjustments. Agreement going forward would show that the older data from the USHCN was properly treated.

Without time machines this is about the best that could be done. An excellent example of perceptive experimental design.

What a sneaky thing to do :)

Anthony's next but one paper will probably be how the Climate Reference Network data has been manipulated to make it seem like it's getting hotter.

To be fair, you can't actually use USCRN to differentiate raw and adjusted USHCN data quite yet; the overlap is short enough and the nature of the pairwise homogenization algorithm tends to limit the amount of adjustments detected in the recent past (since it prefers having a length of time preceding and following the breakpoint to determine the correction needed).

You cannot differentiate between raw and adjusted, but you can already see that the temperature trend in USHCN over the last century is not just due to urbanization. A difference of 0.1°C would be visible because both networks measure the same region.

You are right that the last part of the series is least reliable because it is hard to detect inhomogeneities near the end. You detect them by comparing the mean temperature before and after a potential break. You need years of data to get a reliable mean and thus to see breaks. The NOAA Pairwise Homogenization Algorithm even has an explicit limit and will not set any breaks in the last 18 months.